Table II shows how students read the postings to the discussion forum. The results show that many students were silent readers: 55.3% read between 6 and 234 items in the discussion thread. 24% did not read anything, which is close to the 21.3% that did not post anything. The discussion forum was probably not fully utilised because no marks were allocated to students’ contributions to the forum. Students therefore had no incentive to use it.

Table II: Analysis of student reading of discussion forum

Assignment

Self-Assessment Tests

Table III: Use of Self-tests

There were no scheduled times for using the self-tests and they were not compulsory; students had to take them at their own convenience. The self-tests were probably not fully utilised because of a lack of computing resources.

STUDENTS’ VIEWS OF COURSE

At the end of the course, during the last laboratory session, an online questionnaire was administered to students; 477 students responded. The Educational Technology Unit, the department coordinating eLearning for the University, also held a focus group discussion with eight (8) randomly selected student volunteers.

The quantitative data from the online questionnaire showed that 67.9% agreed that the online course was easy to navigate, 56% felt the design of the course was ‘just Ok’ and 39.8% really liked the design. 60% of students felt that the amount of material in the course was just enough, while 35% felt it was too much. 93.1% felt the presentation was very useful and 78.4% agreed that it met their learning needs. 92% agreed that it made the course better. 57.4% of the students said that the tutors were helpful.

Students were asked what they liked and what they did not like about the online course. The responses were then re-categorised, and the results are shown in Tables IV and V. Students liked a wide range of things about the course, and about 8% said they liked everything about it. The students liked the contents best. Other things that stood out were email, the practical laboratory sessions and the self-assessment questions.

Table IV: What students liked about the course

About 45% did not find any fault with the course. There were also a whole range of things

Table V: What students did not like about the course

The report from the focus group discussion showed that students agreed that the WebCT part of the course helped a lot. WebCT was found to be very user-friendly and easy to navigate. The course was made interesting by the practical sessions, and it helped students in other courses because they were able to type their assignments. Overall, they felt comfortable with computers and felt they had learnt a lot. The difficulties encountered were that there was not enough time, there were not enough computers for further practice, most students did not pay attention in class because they knew they would get the notes on WebCT, and WebCT was very slow to open outside UB. Students confirmed that they got enough support from the lecturer. They were able to communicate using email, though not as often as they would have wanted because of the limited number of computers available. They did, however, request that laboratory demonstrators have more patience for the sake of those with no previous experience in computing.

ANY RELATIONSHIP WITH FINAL ASSESSMENT?

At the end of the semester, a final examination consisting of a set of multiple-choice questions was given. The examination result and two assignments make up the overall course result. Overall, 94.6% of the students passed. Table VI shows a very high correlation between the overall course result and the number of pages visited, the number of hits, and the visits to the discussion forum.

Table VI: Correlation of overall course result with various variables

** Correlation is significant at the 0.01 level (2-tailed).
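The following is a minimal sketch of how a correlation of this kind, and its two-tailed significance, could be computed. It assumes Pearson's coefficient, which the text does not name explicitly, and approximates the significance with the Fisher z-transformation rather than an exact t-test. The usage figures and marks are hypothetical and invented for illustration only; they are not the study's data.

```python
import math
from statistics import NormalDist, mean


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need two samples of equal length with at least 3 points")
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


def two_tailed_p_approx(r, n):
    """Approximate two-tailed p-value for a correlation r from n pairs.

    Uses the Fisher z-transformation with a normal approximation, which is
    reasonable for large n; an exact test would use the t distribution.
    """
    if n < 4:
        raise ValueError("need at least 4 pairs for the approximation")
    if abs(r) >= 1.0:
        return 0.0
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


# Hypothetical usage figures and marks, invented purely for illustration.
pages_visited = [12, 45, 60, 88, 120, 150, 200, 234]
course_marks = [41, 50, 48, 62, 66, 71, 80, 85]

r = pearson_r(pages_visited, course_marks)
p = two_tailed_p_approx(r, len(pages_visited))
print(f"r = {r:.3f}, approximate two-tailed p = {p:.4g}")
print("significant at the 0.01 level" if p < 0.01 else "not significant at the 0.01 level")
```

With a class of several hundred students the normal approximation is close to the exact test; for a sample as small as the illustrative one here, a statistics package would be the safer choice.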

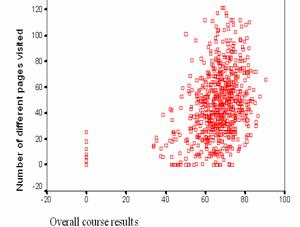

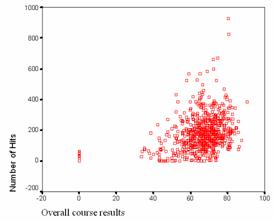

To compare the final examination result with the number of hits and pages visited, a scatter diagram was drawn, as shown in Figure II.

Figure II: Relationship of use with overall course results

This confirms that the exam marks are closely related to how many pages were visited, how many times the student used the site, and how much the student visited the discussion group. The use of the self-test module and the number of postings made had no significant relationship with the overall course result. This is likely because the self-test module was not well used by the students (see Table III). Berger (n.d.), however, argues that such a correlation might not mean anything: the real independent variable is probably motivation, and the lasting effect on overall student performance is hard to determine in this study.

PROVISION AND SUPPORT

What makes WebCT work more than anything is the support (Miller 1999). Support is crucial every step of the way. The Educational Technology department managed the student accounts for the software package. The Faculty Information Technology Unit supported the computers. Laboratory demonstrators were present during laboratory sessions to assist students.

EXPERIMENT RESULTS

Advantages

Luckily, out of the five laboratory demonstrators, three had taught the course without eLearning the previous year. An interview with these three to find out what they felt about the use of eLearning showed that they were very enthusiastic. They felt the students had a better grasp of the course and could use the computers more comfortably than students from the previous year. They also confirmed that they were able to manage the laboratory better, with fewer questions, which they attributed to the fact that the students had the instructions in front of them.

Course assessment was another area that benefited. Collection was better and easier through electronic submission, and we eliminated the problem of missing scripts. Marking was more thorough. It was easier for us to detect student mistakes in the electronic version that we would not have detected in the paper copies. For example, when students were asked to use the header/footer feature in Microsoft Word, we could detect whether it was used or whether they had simply typed the text at the top of the page. It was also easy for us to give detailed feedback to the students through the system. When students check their scores, they find details of how the work was marked by section, together with comments on the mistakes they made. We had a template which we copied and pasted into each student’s remark section and then filled in for that particular student. This was almost impossible with manual marking, where distributing the papers back to the students was also difficult. A further advantage was that no time was spent separately recording the assessments of 750 students, a process that is also error-prone: some students’ scripts were accidentally skipped in the past. Marks were entered into the system as the marking was done, and at the end we could export them into an Excel spreadsheet.

Overall, I felt satisfied with the course. Students used the materials extensively. They learnt more skills than were required in the syllabus. I could sense I was dealing with confident students. This is quite different from previous years, when some students would complete the course and still have ‘techno-phobia’. Within a few weeks, students had learnt to switch between screens and use the eLearning materials as their reference. I also had many appreciative students who sent comments about the course through email. This is in line with the findings of Berger (n.d.), who experimented with web-based material to support a large class. He concluded that he had a better rapport with his class, that he was able to respond easily to student questions, and that there was a strong correlation between web use and final marks.

Disadvantages

Solving the problem of missing scripts created another problem: students submitting wrong files and blank files. This created the management problem of allowing students to re-submit, and in some cases it was only after the results were out that students realised they had got a zero (0) in the assignment. Such students were allowed to re-submit, and the dates the files were created were checked to confirm that they were not beyond the deadline.

Though the electronic assessment had its own benefits, it actually needed more time to mark than the paper version. This is partly because of the added time it takes to open and close a file. There are also additional things to check. For example, the view in which the student saved the file determines whether the header and footer are visible, which might mean changing the view before marking. Other things that could have added to the time included the individual comments given on each marked script and some students not following instructions.

As a backup in the event that WebCT went down, there was a paper version that could be photocopied for the students. WebCT fortunately went down for only one group, once during the semester, so it was easy to organise a make-up session for that group. Also, when students submitted assignments, a back-up was downloaded onto a stand-alone machine in case there was a network problem during the marking period. A network problem did occur, but only for a short period, so we did not have to use the back-up copy.
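The check applied to re-submitted files, mentioned under the disadvantages above, could be automated along the following lines. This is only a sketch under stated assumptions: the function name `check_submission` is hypothetical, and the file's last-modified time is used in place of the creation date because creation time is not available portably across operating systems. It does not describe how WebCT itself handled submissions.

```python
from datetime import datetime, timezone
from pathlib import Path


def check_submission(path, deadline):
    """Classify one submitted file as 'blank', 'late' or 'ok'.

    `deadline` must be a timezone-aware datetime. The last-modified time
    stands in for the creation date (an assumption of this sketch).
    """
    if deadline.tzinfo is None:
        raise ValueError("deadline must be timezone-aware")
    info = Path(path).stat()
    # A zero-byte file is treated as a blank submission.
    if info.st_size == 0:
        return "blank"
    modified = datetime.fromtimestamp(info.st_mtime, tz=timezone.utc)
    return "late" if modified > deadline else "ok"
```

A marker could run such a check over the downloaded back-up folder before marking starts, so that blank files are flagged while students can still re-submit. Note that a non-empty file with no meaningful content, or a wrong file, would still need to be caught by hand.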

CURRENT SITUATION

The use of eLearning in the course provided great help. The same course is now being used from August to December 2004 for a new batch of students. Because of its usefulness, it has also been adopted by all faculties in the University. The current population using it is over 3000 students.

CONCLUSIONS

eLearning is no substitute for what is done in lectures, but it is a very useful support tool. The use of eLearning for the class supported the views in the literature on its benefits for large classes. Some of the advantages it provided stem from the fact that activities are independent of time and place. This created greater freedom for students: apart from class times, they could log in at any other time, examine their course and laboratory sessions, and take part in any available online discussion. WebCT also provided the ability to track assignments submitted and assessed, and an easy way to give feedback to students. Other advantages included more interaction with the students through email and online discussion, and easier management of students’ assignments. There was also enough evidence that students felt it added value to the course, and there was a strong correlation between its use and overall course results.

Although it provided benefits, it is apparent that students need access to computers on which they can reach the materials. There is therefore a need to ensure a reasonable student-to-computer ratio. Adequate technical support is also needed for it to be successful, with adequate support staff for hardware, software and training. There is a need for a ‘back-up’ plan for delivery as well as assessment in case there is a problem with the system. This could include paper copies for laboratories or off-line versions on compact disc for delivery, as well as off-line versions of the assessment, in case there is a network failure.

Assessments should be well planned. There should be a ‘back-up’ plan for students who submit wrong or blank files. More time should also be allocated to the marking of assessments to allow detailed feedback to the students. Discussion groups should be well planned and should probably carry some marks in the overall course result to motivate students to use them. Provision could be made during laboratories to allow students to fully utilise the self-assessment tools.

REFERENCES

Berger, J.D. (n.d.). "Experimenting with Web-based Material to Support Large Lecture Course". Available at: http://www.tag.ubc.ca/facdev/services/registry/ewwbmtsallc.html (Accessed September 1, 2004).

Douglas, P. & McNamara, R. (2002). "Using computer technology as an aide to teaching large classes". Available at: http://www.bond.edu.au/bus/research/02-06McNamara.pdf (Accessed September 1, 2004).

Eyitayo, O.T. & Giannini, D. (2004). "Instructional Support and Course Development for Computer Literacy Course: Using Anchored Instruction". In Proceedings of IASTED International Conference on Web-Based Education, pp 206-21, February 16-18, 2004, Innsbruck, Austria. (ISBN: 0-88986-406-3).

Larsen, L. (2000). "Enhance Large Classes with WebCT". Available at: http://www.oit.umd.edu/ItforUM/2000/Fall/webct/ (Accessed January 16, 2003).

Lucas, G. & Hoffman, B. (1998). "Module 1: Strategies and Tactics for Online Teaching and Learning". Available at: http://www.edweb.sdsu.edu/T3/module1/Connect.htm (Accessed January 16, 2003).

Miller, G. (1999). "WebCT Responds to Campus Need for Online Teaching Resources". Available at: http://www.inform.umd.edu/UA/UnivRel/outlook/1999-02-16/feb-16-99/webct.html (Accessed September 1, 2004).

Moore, M.G. & Kearsley, G. (1996). Distance education: A systems view. Belmont, CA: Wadsworth Publishing Company.

Morris, M.S. (2002). "Lessons learned from Teaching a Large Online Class teaching". Curriculum and Instruction 505, Spring 2002. Available at: https://webct.ait.iastate.edu/ISUtools/webhtml/designer/community/morris.pdf (Accessed January 16, 2003).

The Pennsylvania State University (n.d.). "Large Class FAQ: Technology". Available at: http://www.psu.edu/celt/largeclass/faqtech.html#3.

Copyright for articles published in this journal is retained by the authors, with first publication rights granted to the journal. By virtue of their appearance in this open access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

Original article at: http://ijedict.dec.uwi.edu//viewarticle.php?id=109&layout=html
